
Alishba Imran

I’m a machine learning researcher at EvolutionaryScale (now Biohub), where I work on foundation models for biology. At UC Berkeley, I conducted research with the Arc Institute and Chan Zuckerberg Biohub, developing large-scale models that learn biologically interpretable representations of cells under genetic and chemical perturbations. I also worked with Pieter Abbeel’s group on protein language modeling. I am a co-author of the book AI for Robotics (Apress, Springer Nature).

Prior to Berkeley, I worked on physics-based modeling and machine learning for battery materials discovery at Tesla under Dr. Matthew Murbach. I later founded Voltx, a machine learning and physics platform to accelerate battery development. Voltx raised a $1.3M pre-seed round and secured partnerships with major U.S. and European manufacturers. I’ve also contributed to AI research at Cruise and NVIDIA.

My journey in machine learning began in high school at Hanson Robotics, where I co-led engineering under Dr. David Hanson, contributing to neuro-symbolic AI and open-source hardware for Sophia the Robot.

I'm grateful to the communities that have supported my work and education: Masason Foundation, Interact Fellowship, Accel Scholars, and Emergent Ventures. During my time at Berkeley, I was involved with the external and research teams at Machine Learning at Berkeley.


Research

I'm broadly interested in AI, biology, and robotics research. Currently, I'm excited about single-cell perturbation models, multi-modal protein language models, and the interpretability of these methods.


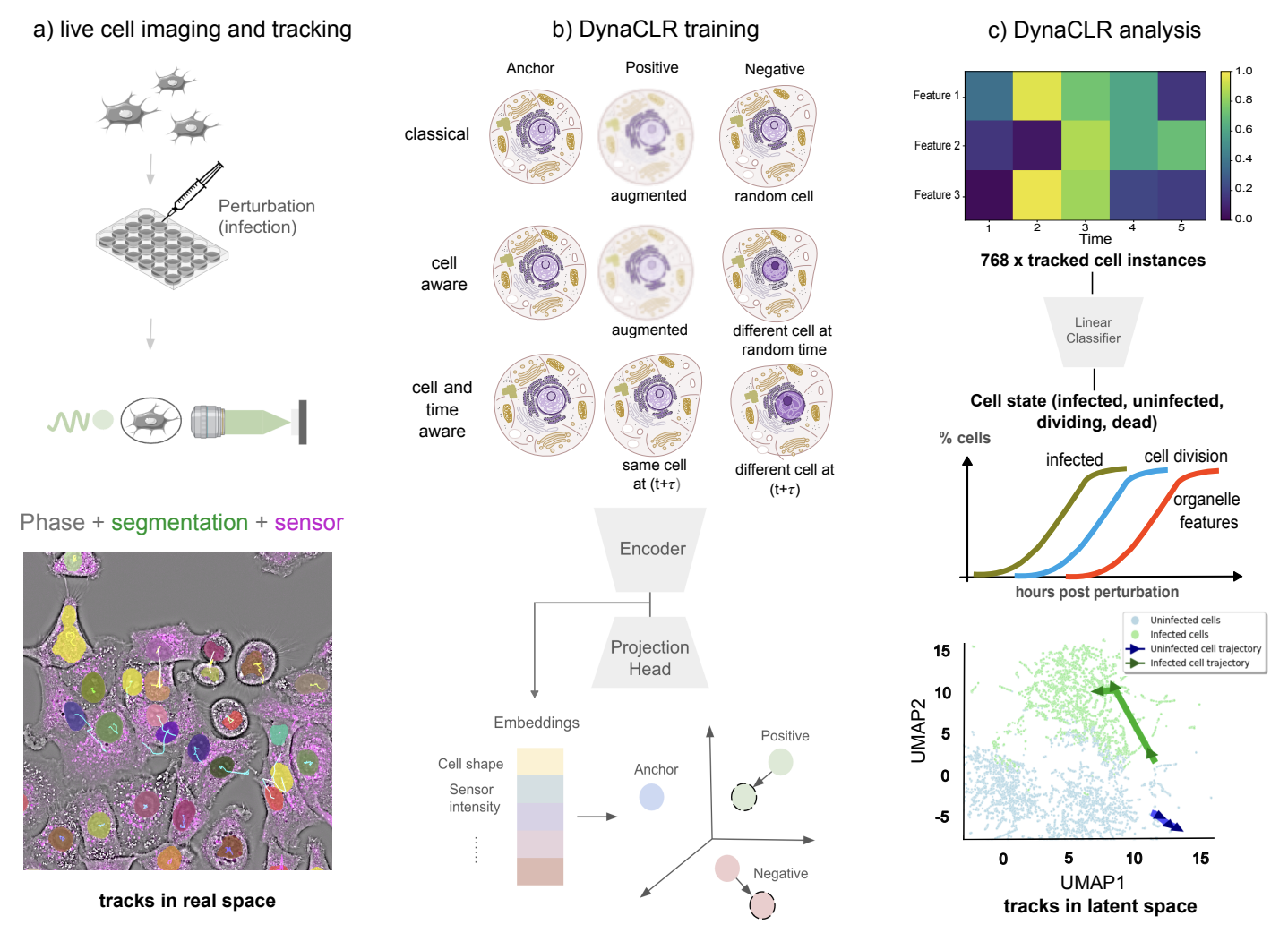

Contrastive learning of cell state dynamics in response to perturbations

Soorya Pradeep, Alishba Imran, Ziwen Liu, Taylla Milena Theodoro, Eduardo Hirata-Miyasaki, Ivan Ivanov, Madhura Bhave, Sudip Khadka, Hunter Woosley, Carolina Arias, Shalin B Mehta
arXiv preprint, 2024/10/15. Under review at Cell Patterns.
Codebase / Visualization Tool

We introduce DynaCLR, a self-supervised framework that applies contrastive learning to time-lapse imaging data to model how cell states change in response to perturbations. The method improves cell state classification, clustering, and embedding across perturbations such as infections, gene knockouts, and drug treatments.


AI for Robotics

Alishba Imran and Keerthana Gopalakrishnan
Published by Apress, Springer Nature, 2025

This textbook reimagines robotics through a deep learning lens, recasting decades-old robotics challenges as AI problems. It covers modern AI techniques for robot perception, control, and learning, and explores their applications in self-driving cars, industrial manipulation, and humanoid robots. The book concludes with insights into operations, infrastructure, safety, and the future of robotics in an era of large foundation models.


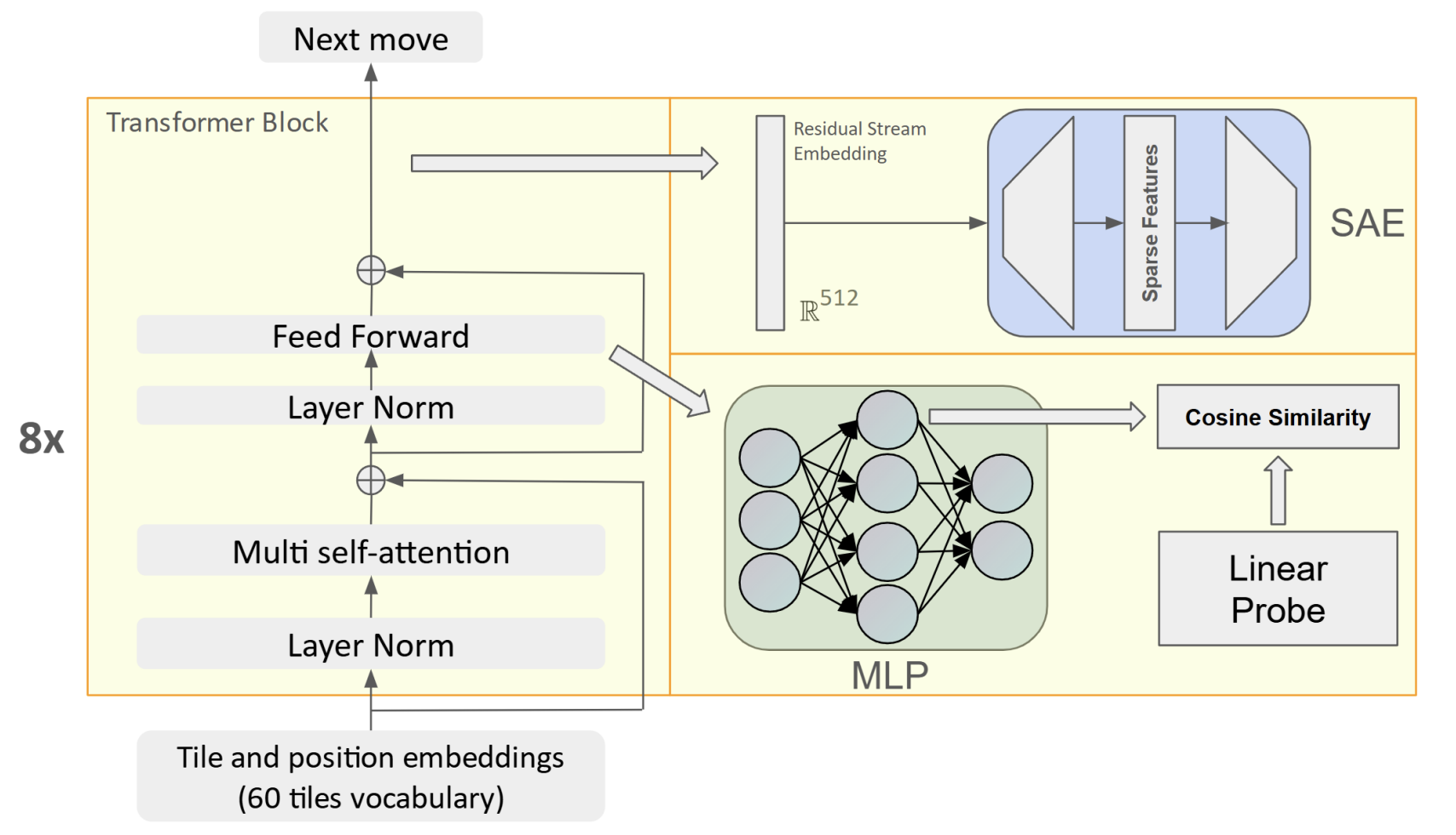

How GPT Learns Layer by Layer

Jason Du, Kelly Hong, Alishba Imran, Erfan Jahanparast, Mehdi Khfifi, Kaichun Qiao
Author list is presented in alphabetical order. I was a co-lead on the project.
arXiv:2501.07108

Large language models (LLMs) often struggle to build the generalizable internal world models essential for adaptive decision-making in complex environments. Using OthelloGPT, a GPT-based model trained on Othello gameplay, we analyze how LLMs progressively learn meaningful game concepts through layer-wise representations. We find that sparse autoencoders (SAEs) offer more robust insights into these internal features than linear probes, providing a framework to better understand LLMs' learned representations.


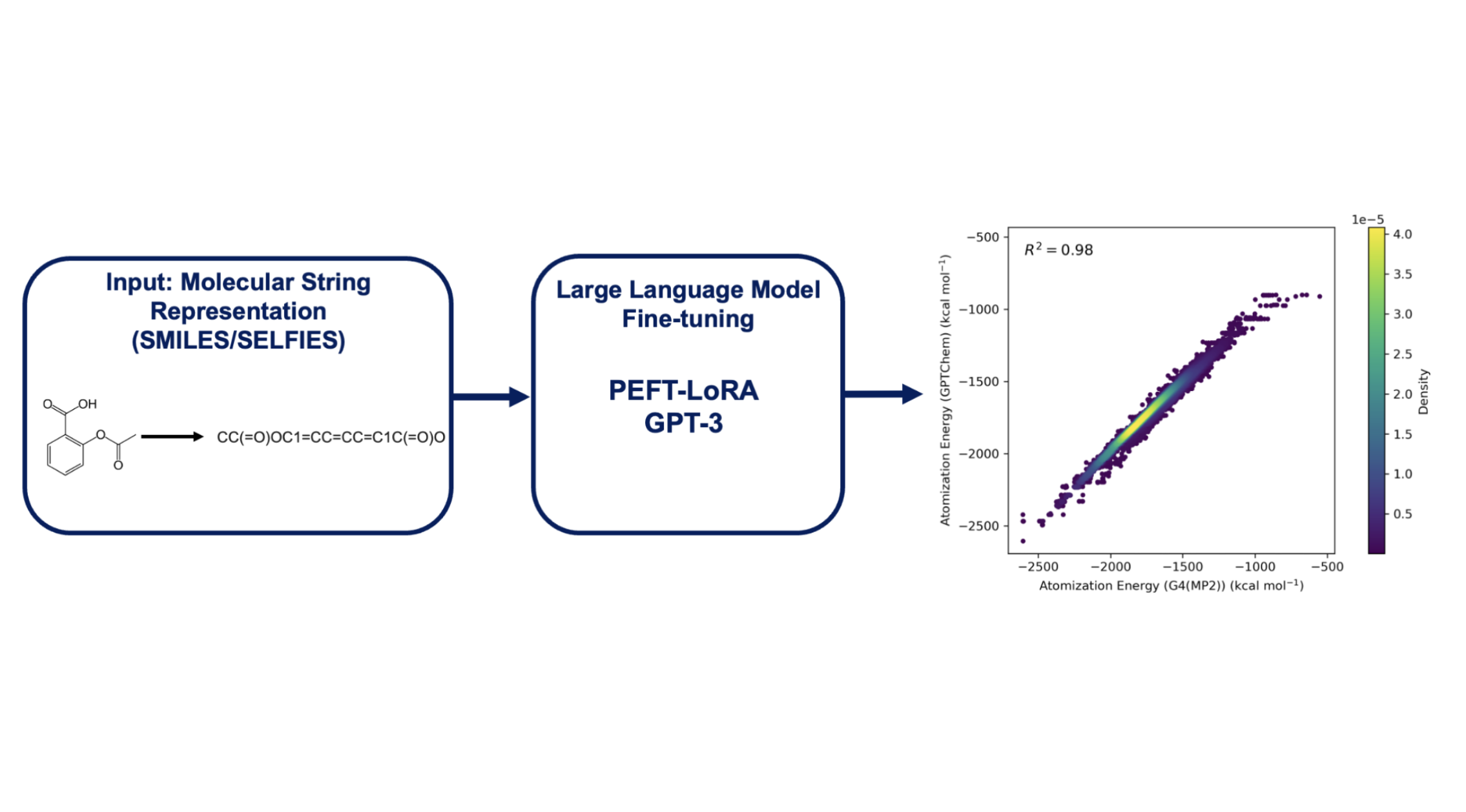

14 Examples of How LLMs Can Transform Materials Science and Chemistry

Kevin Maik Jablonka et al.
Digital Discovery, 2023, 2, 1233–1250

We fine-tuned LLMs with the LIFT framework to predict atomization energies. LLMs operating on string representations such as SMILES and SELFIES achieved good performance (R² > 0.95), but their predictions were still less accurate than those of models using 3D molecular information. By applying Δ-ML techniques, we reached chemical accuracy, showing how established ML methods can be adapted for LLMs in chemistry.


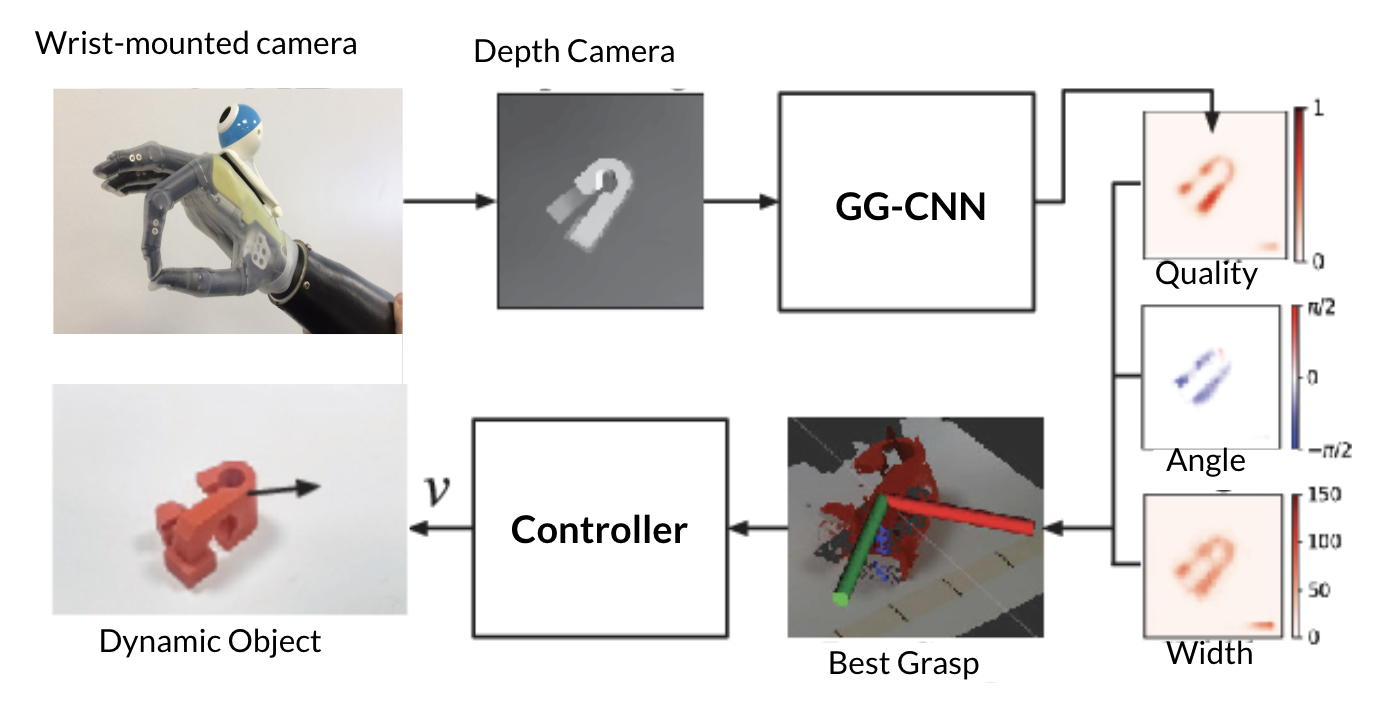

Design of an Affordable Prosthetic Arm Equipped With Deep Learning Vision-Based Manipulation

Alishba Imran, William Escobar, Fred Barez
ASME International Mechanical Engineering Congress and Exposition (IMECE), Paper No: IMECE2021-68714

This paper outlines the design of a novel prosthetic arm that reduces the cost of a prosthetic from $10,000 to $700. Equipped with a depth camera and a deep learning algorithm, the arm achieves a 78% grasp success rate on unseen objects, leveraging scalable off-policy reinforcement learning methods such as deep deterministic policy gradient (DDPG). This work makes prosthetics more accessible and better able to adapt to real-world tasks.


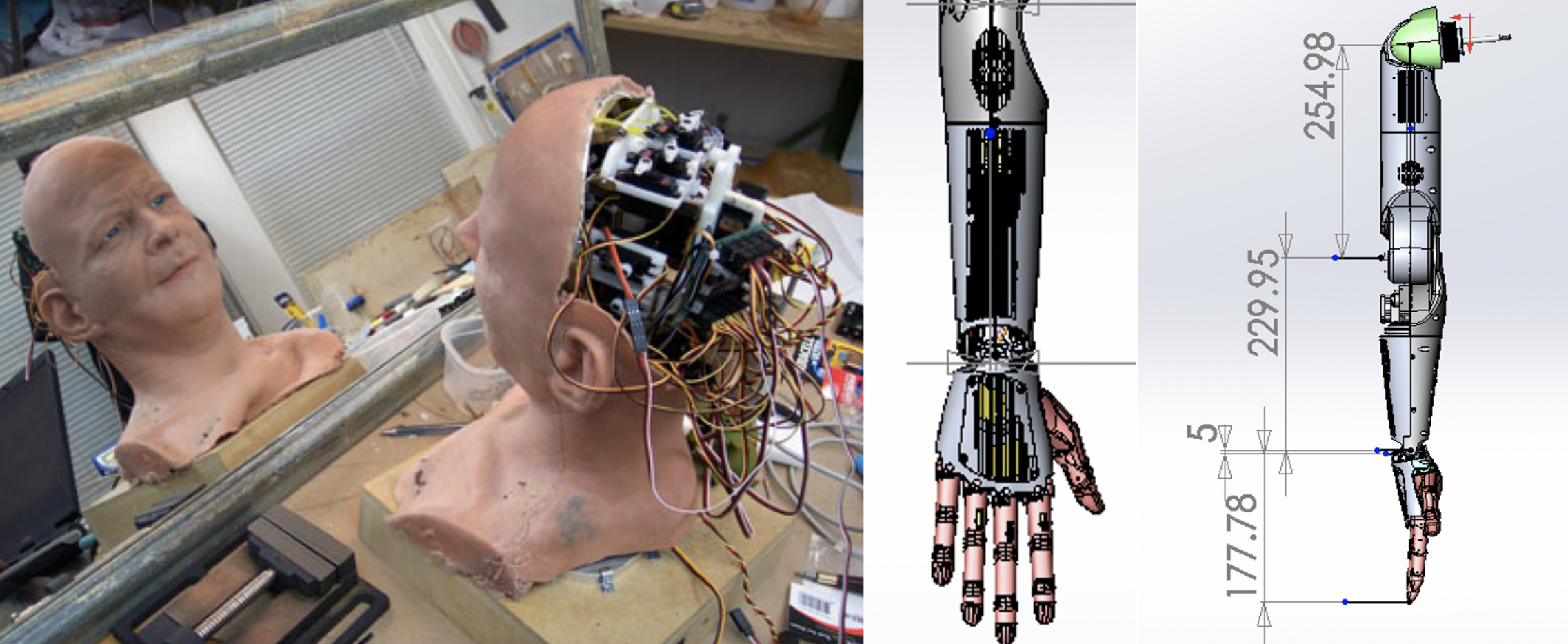

A Neuro-Symbolic Humanlike Arm Controller for Sophia the Robot

David Hanson, Alishba Imran, Abhinandan Vellanki, Sanjeew Kanagaraj
Hanson Robotics Ltd

This paper outlines the development of humanlike robotic arms with 28 degrees of freedom, touch sensors, and series elastic actuators. The arms combine machine perception, convolutional neural networks, and symbolic AI. They were modeled in Roodle, Gazebo, and Unity and integrated into Sophia 2020 for live games such as baccarat and rock-paper-scissors, as well as handshaking and drawing. The framework also supports ongoing research in human-AI hybrid telepresence through the ANA Avatar XPRIZE, extending applications to the arts, social robotics, and co-bot solutions.


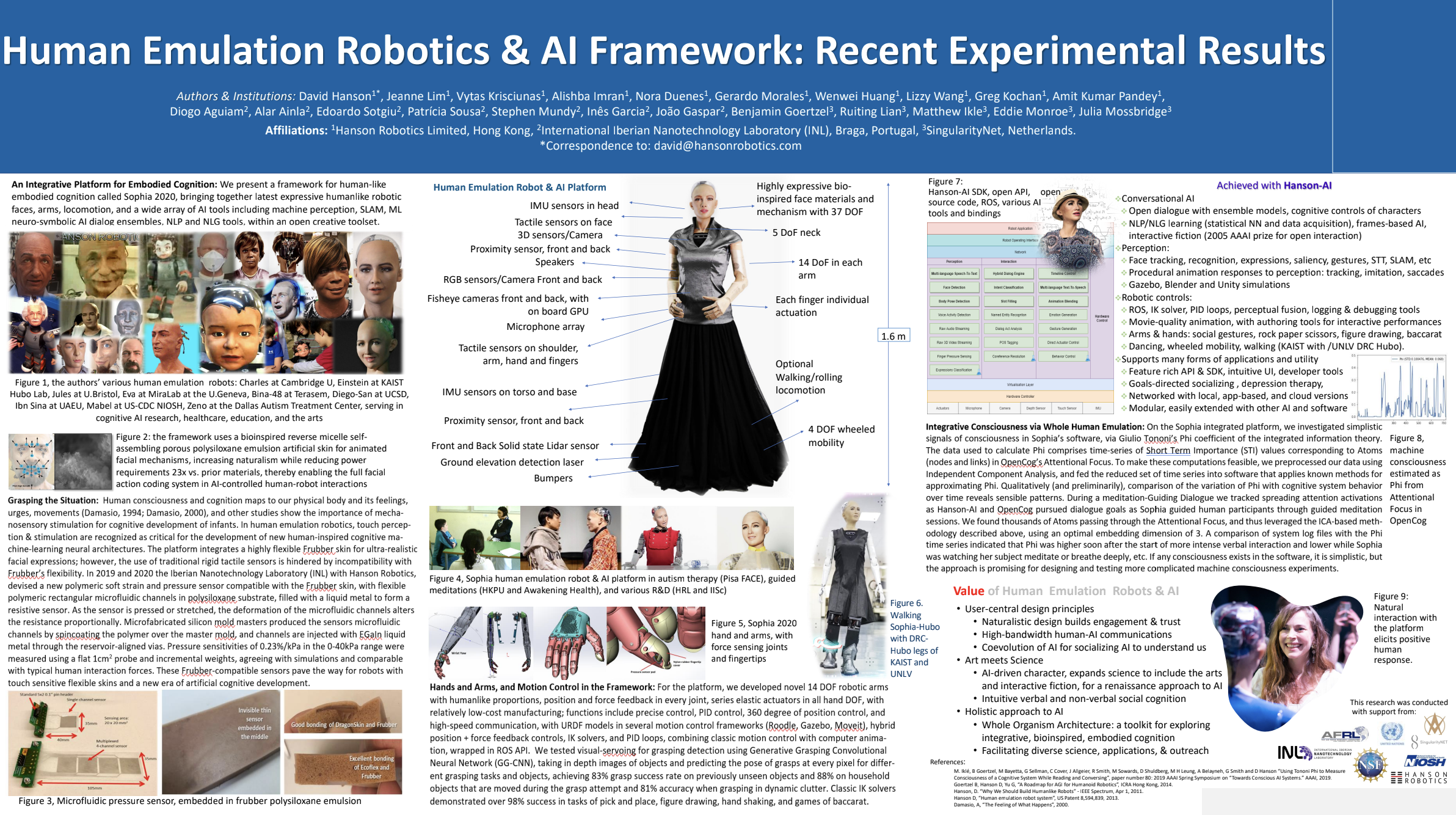

Human Emulation Robotics & AI Framework: Recent Experimental Results

David Hanson, Jeanne Lim, Vytas Krisciunas, Alishba Imran, Nora Duenes, Gerardo Morales, Wenwei Huang, Lizzy Wang, Greg Kochan, Amit Kumar Pandey, Diogo Aguiam, Alar Ainla, Edoardo Sotgiu, Patrícia Sousa, Stephen Mundy, Inês Garcia, João Gaspar, Benjamin Goertzel, Ruiting Lian, Matthew Ikle, Eddie Monroe, Julia Mossbridge
Poster presented at the American Association for the Advancement of Science (AAAS) Annual Meeting, 2021

We present Sophia 2020, a framework for human-like embodied cognition. This integrative platform combines the latest in expressive human-like robotic faces, arms, locomotion, and AI tools, including machine perception, SLAM, neuro-symbolic AI, and NLP. It provides a creative, open toolset for advancing embodied cognition research.


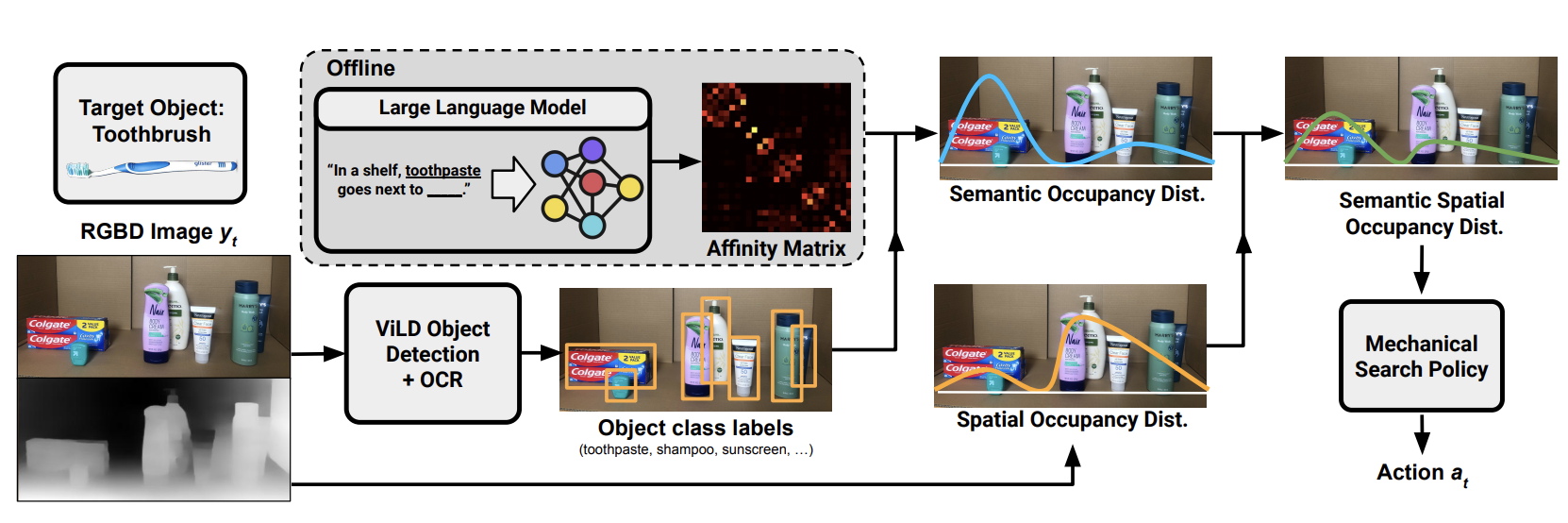

Semantic Spatial Search on Shelves (S^4)

Satvik Sharma*, Kaushik Shivakumar*, Huang Huang*, Ryan Hoque, Alishba Imran, Brian Ichter, Ken Goldberg arXiv preprint, 2023

S^4 uses large language models (LLMs) to guide robots in finding occluded objects on shelves by reasoning about semantic proximity. It combines OCR, ViLD object detection, and semantic refinement to accelerate search and improve object detection accuracy.


Awards & Public Speaking

My work has been featured in Forbes and on the BBC, and I've spoken to over 40,000 people at tech conferences such as TEDx, MWC, and CES. I was recently named to SF Business Times' Inno Under 25, Teen Vogue's 21 Under 21, and the Top 100 Most Powerful Women in Canada.

Investments

I work with Kleiner Perkins as an investment scout. I've invested small cheques in various AI, bio, and hardware companies.

Miscellanea

